Proxet answers the call to experiment: Using LLMs to create API calls

ChatGPT and other Large Language Models (LLMs) like it are set to transform business again, this time by offering a compelling solution to the common challenge of creating API calls.

An API call is one application requesting data from another. Wiring applications together this way requires extensive custom code, such as ETL pipelines, integrations, and custom workflows. This “glue code” adds to the complexity and cost of enterprise solutions.

LLMs signal a new era in software development, one where the need for glue code could be substantially reduced or even eliminated. This shift is not about reducing dependence on data engineers, but about making sophisticated API interactions easier to access.

Here are several reasons to use an LLM to create API calls:

- Simplify Complex Tasks: LLMs can interpret and simplify complex API documentation, making it easier to understand how to interact with a particular API. This is useful for developers who may not be familiar with the specific API.

- Generate Code: LLMs can generate code snippets based on your requirements. This means you can describe what you want to do in plain language, and the LLM can produce the corresponding API call syntax.

- Reduce Errors: By generating API calls, LLMs can help reduce human error. This is especially useful in cases where API calls are complex and prone to typos or syntax errors.

- Educate the User: For those new to APIs or to a particular technology, an LLM can serve as a learning tool that shows how API calls are structured and how they work.

- Offer Flexibility and Customization: An LLM can tailor the API calls based on the specific parameters and authentication methods provided. This means you can get more customized and efficient API interactions.

- Assist in Integrations: LLMs can help you understand how to integrate different APIs by providing examples and explanations, making it easier to combine multiple services in your application. This can speed up the work of a seasoned engineer or help a less experienced engineer complete the task.

- Provide Up-to-date Information: Connecting the LLM to the web gives it access to current API information taken directly from each service's documentation.

- Support Multiple Languages: LLMs can potentially generate API calls in multiple programming languages, making them easier to integrate into different types of projects.

- Help Debug: LLMs can also assist in debugging API calls by suggesting potential causes of common errors and solutions.

Proxet’s experiment

Our goal: an LLM that receives a request of the form “Create <service name> API call to complete <task description>” and responds with working code for the task, along with all the necessary comments.
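To make the target concrete, here is a minimal sketch of how such a request could be packaged as chat messages for an LLM. The function names `build_api_request_prompt` and `build_messages` are hypothetical, and the system-message wording is an assumption added for illustration. Only the request template itself comes from our goal statement.

```python
def build_api_request_prompt(service_name, task_description):
    """Fill in the request template from the experiment's goal."""
    return f"Create {service_name} API call to complete {task_description}"


def build_messages(service_name, task_description):
    """Wrap the request in chat-style messages.

    The system message below is an illustrative assumption: it asks the model
    for runnable code with explanatory comments, matching the goal above.
    """
    system = (
        "You are an API integration assistant. Reply with runnable code for the "
        "requested call, with comments explaining each parameter."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": build_api_request_prompt(service_name, task_description)},
    ]
```

For example, `build_messages("OpenAI", "a chat completion")` produces a system message followed by the user request “Create OpenAI API call to complete a chat completion”.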

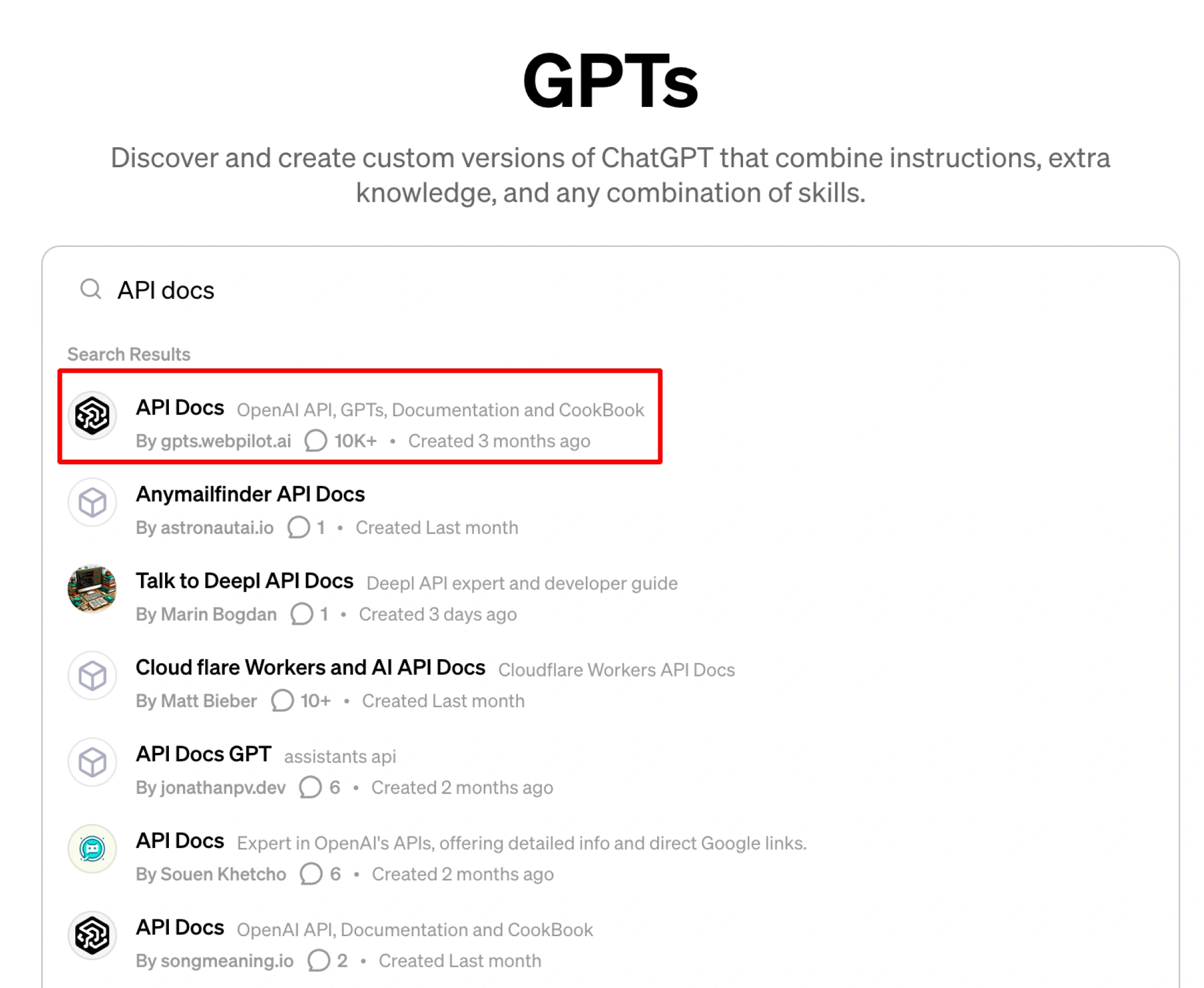

Our starting point: OpenAI’s GPT Store offers custom GPTs tailored to a wide range of tasks. Here is what the GPT Store returns when we search for “API”:

Our first attempt: try the most suitable custom GPT for our task. The first candidate is API, which describes itself as “An API expert, offering technical advice and examples.”

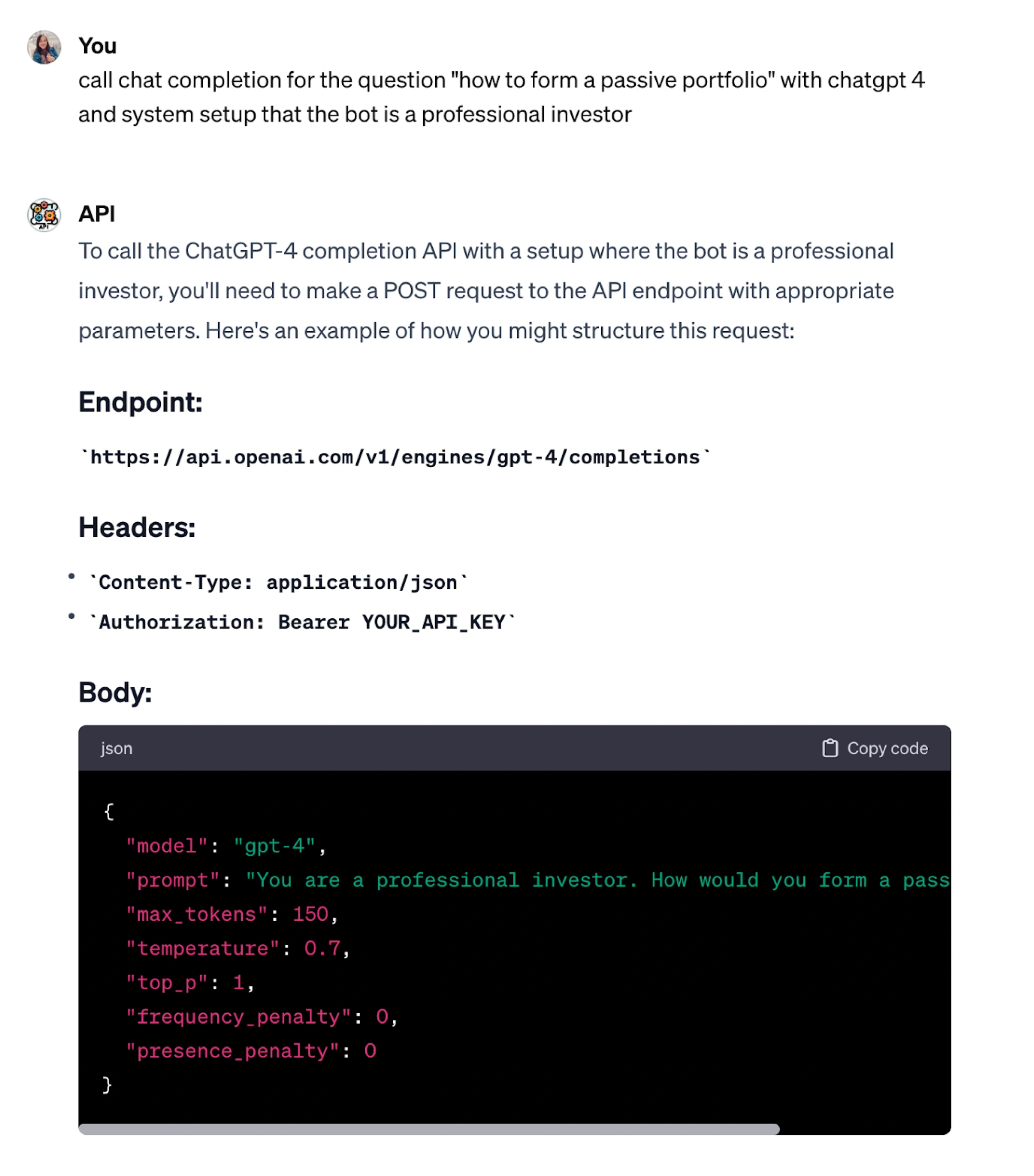

Our query was:

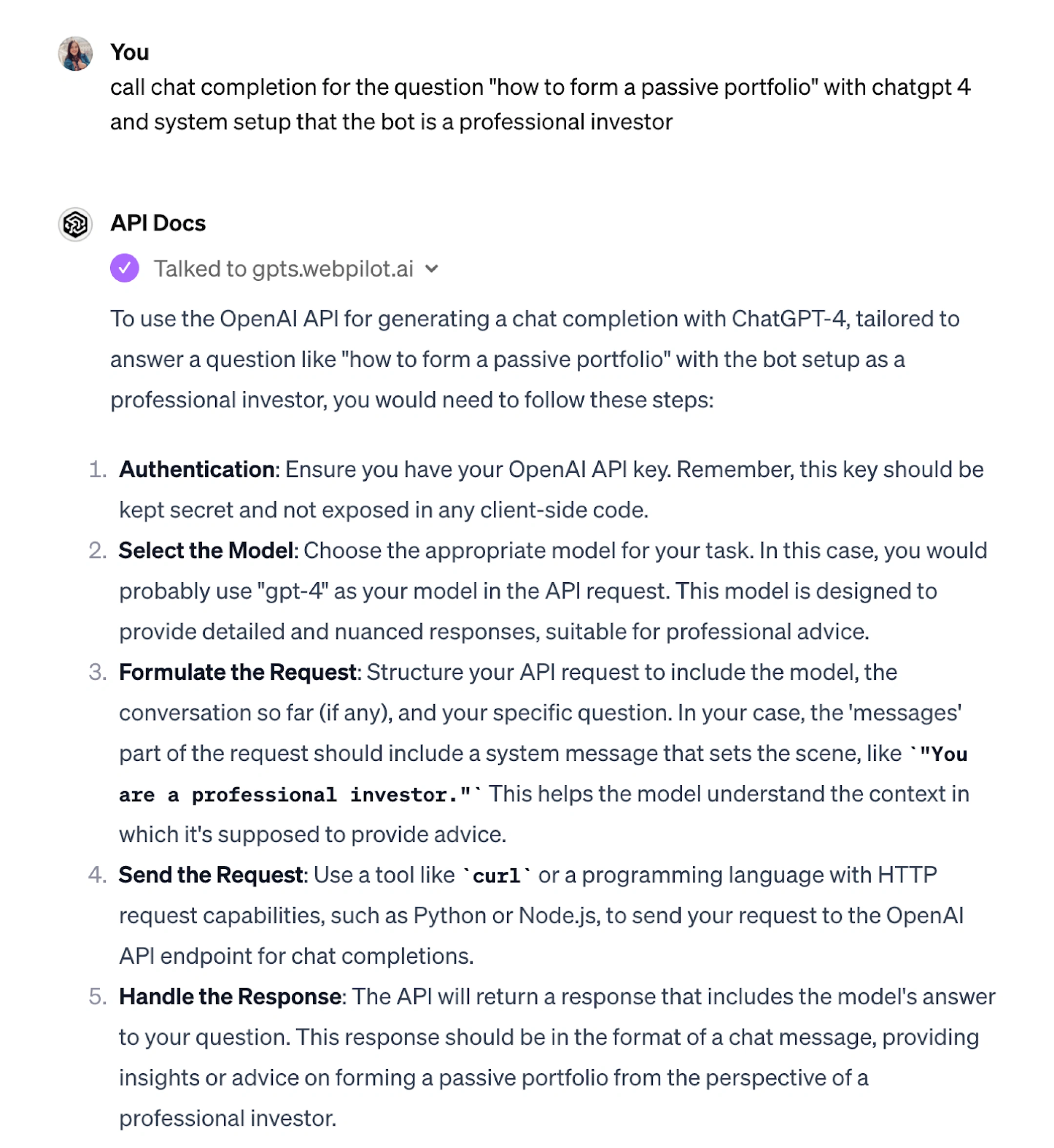

call chat completion for the question "how to form a passive portfolio" with chatgpt 4 and system setup that the bot is a professional investor

Here were the results:

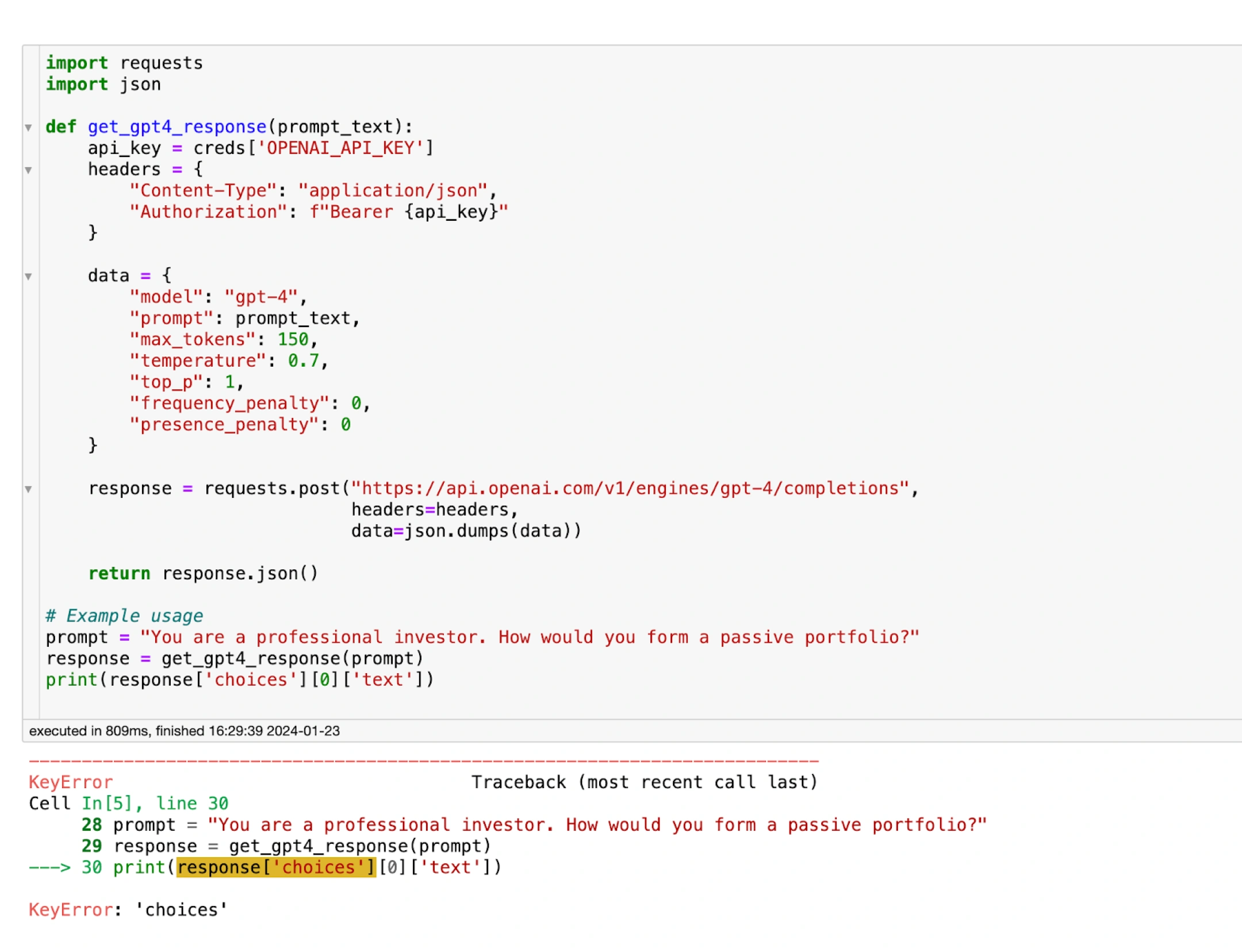

Let’s move this code to Python and check whether it works:

Our evaluation: the code fails because both the request URL and the request arguments are incorrect. Without access to what happens “under the hood,” we can’t say why the GPT produced them.

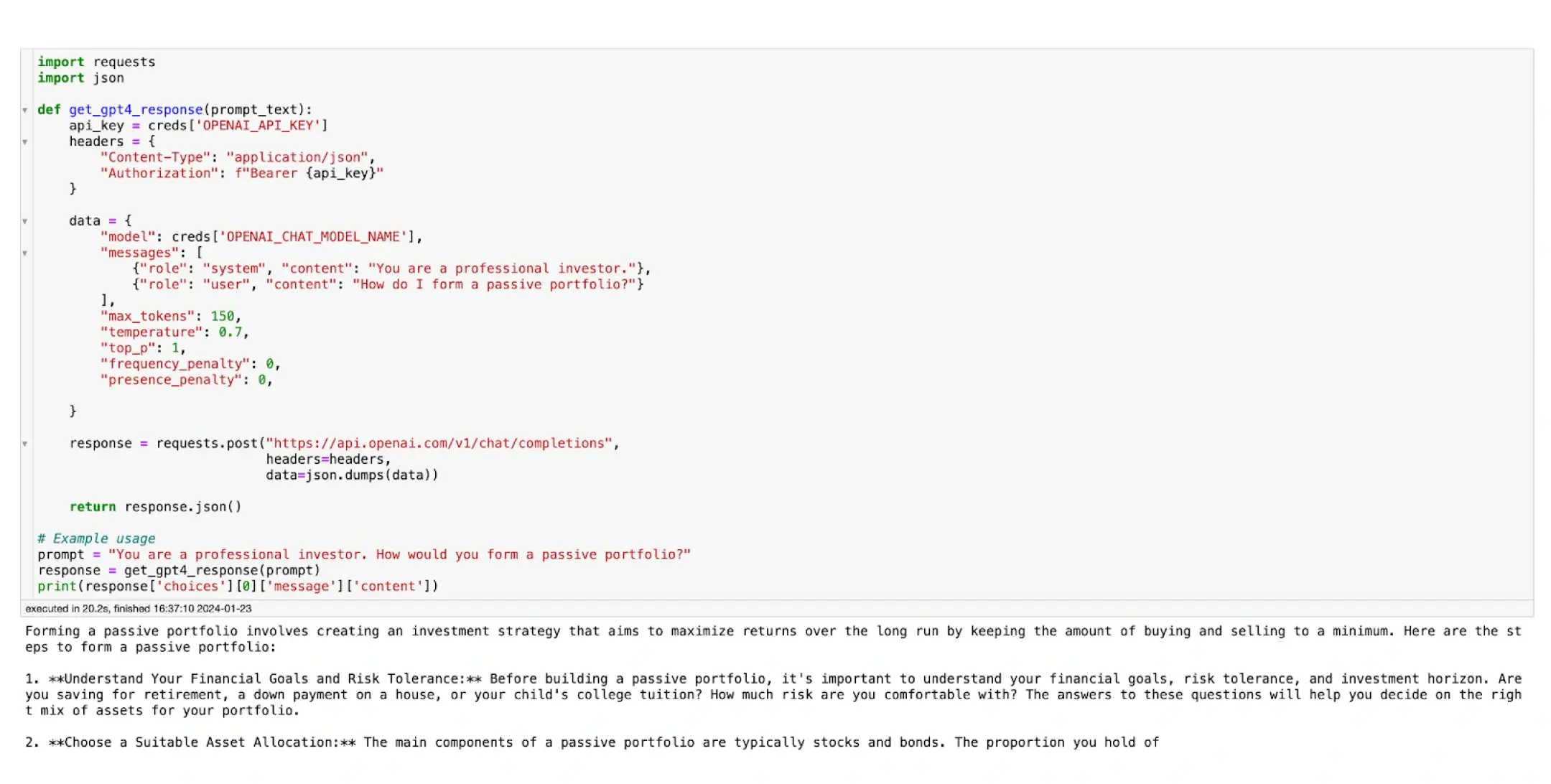

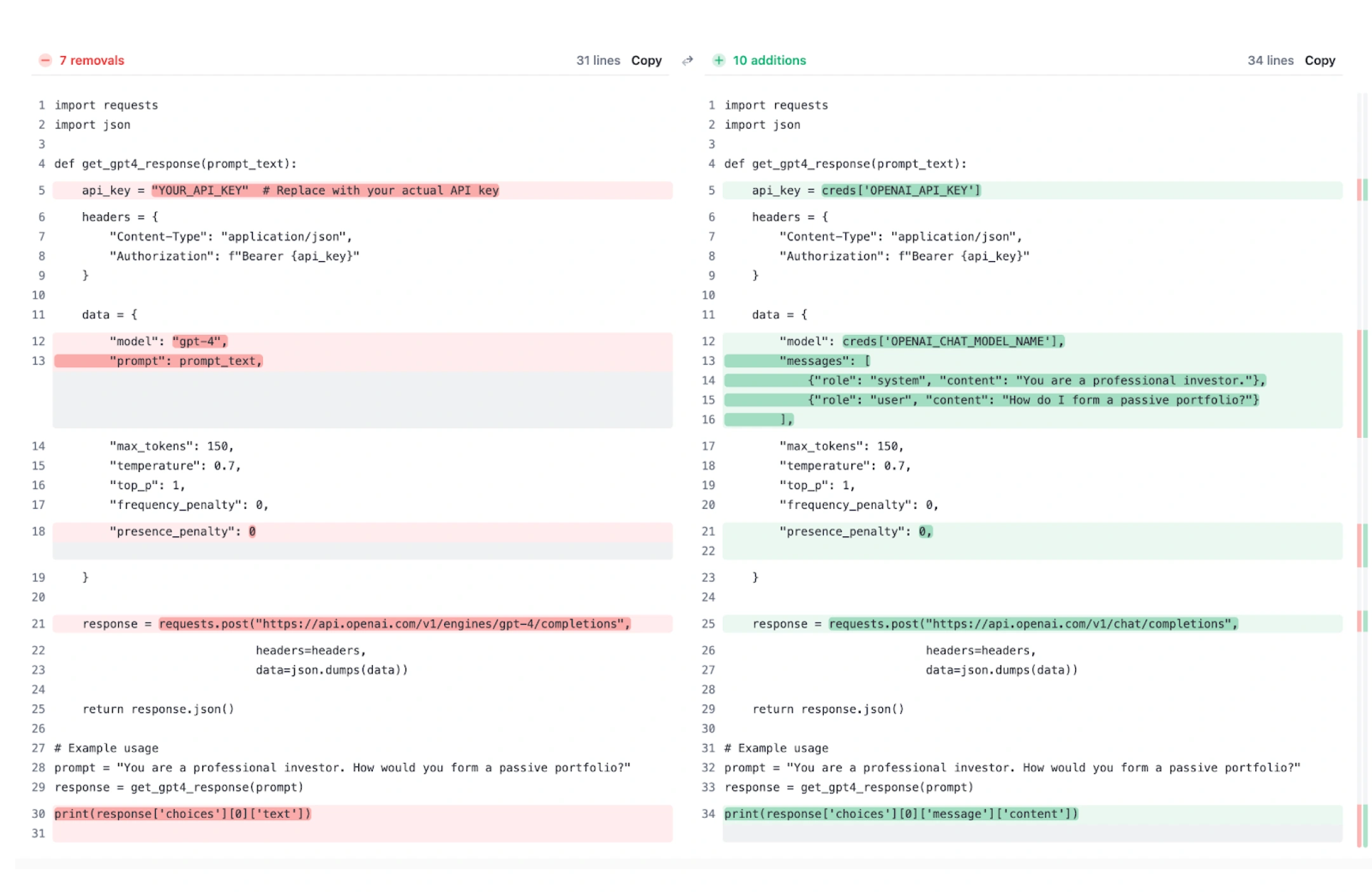

Here is the proper code:

Comparing the working code with the code the custom GPT generated shows substantial differences. Fixing it requires someone with an engineering background, so in this experiment the tool did not make the engineer’s life any easier.
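The generated code failed because its URL and request arguments were wrong. For reference, here is a minimal sketch of the same query (a GPT-4 chat completion with a “professional investor” system message) expressed as a raw HTTP request using only the Python standard library. This is not the code from our screenshots. It assumes the public `https://api.openai.com/v1/chat/completions` endpoint with Bearer-token authentication, and `"gpt-4"` stands in for “chatgpt 4”. The function names are hypothetical.

```python
import json
import urllib.request

# Chat Completions endpoint of the OpenAI REST API.
CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, question, system_prompt, model="gpt-4"):
    """Build (but do not send) a POST request with a JSON body of model + messages."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        CHAT_COMPLETIONS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the text of the first reply choice."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Building the request separately from sending it lets you inspect the URL, headers, and body before spending an API call. A real run would pass your key (for example, read from an environment variable), the question “How to form a passive portfolio?”, and the system prompt “You are a professional investor.” to `build_chat_request`, then call `send` on the result.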

Our revised starting point: the GPT Store also offers API Docs, which describes itself as “OpenAI, GPTS, Documentation, and Cookbook.”

We tested this tool with a simple request to find out whether this custom GPT returns working code:

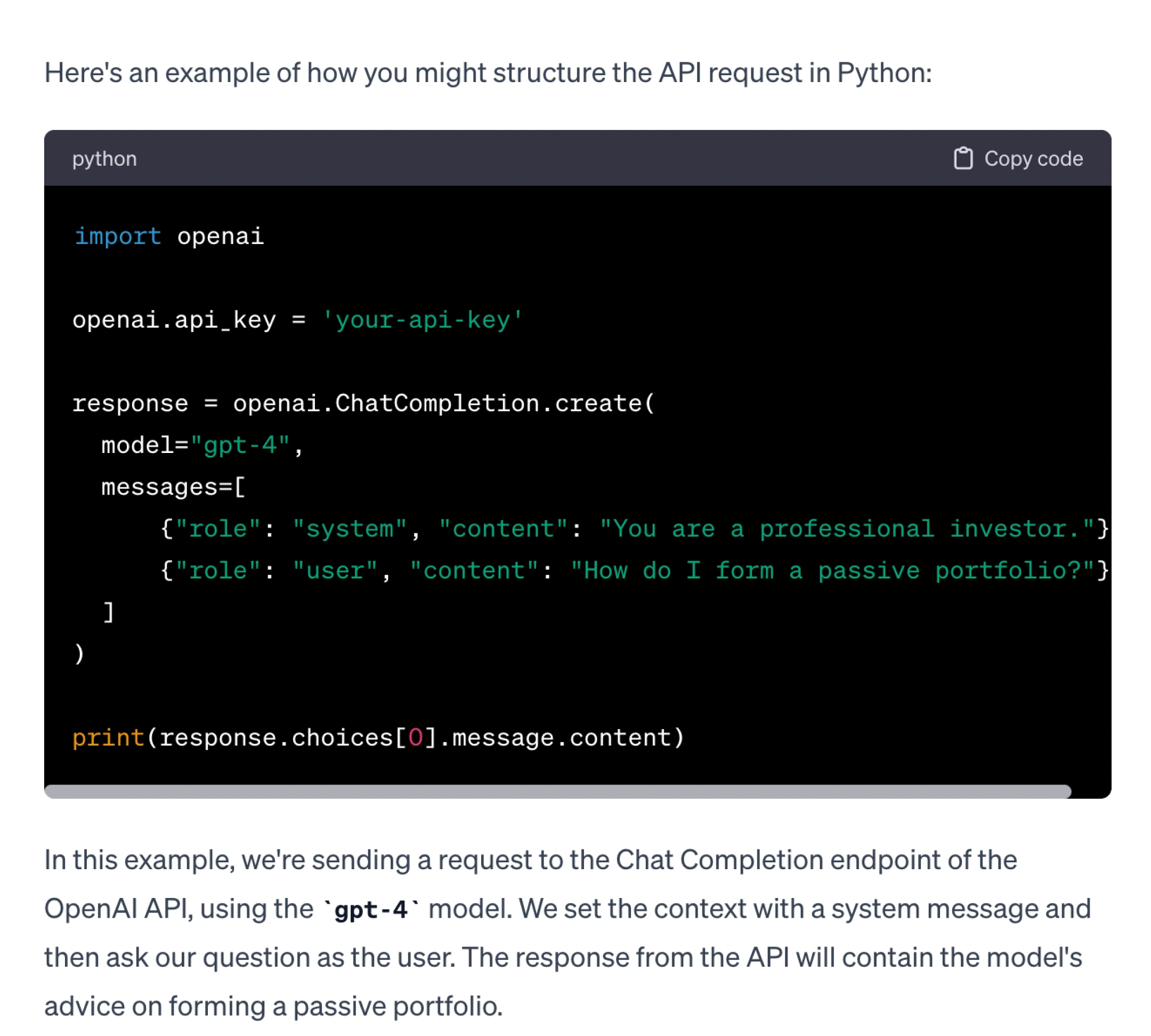

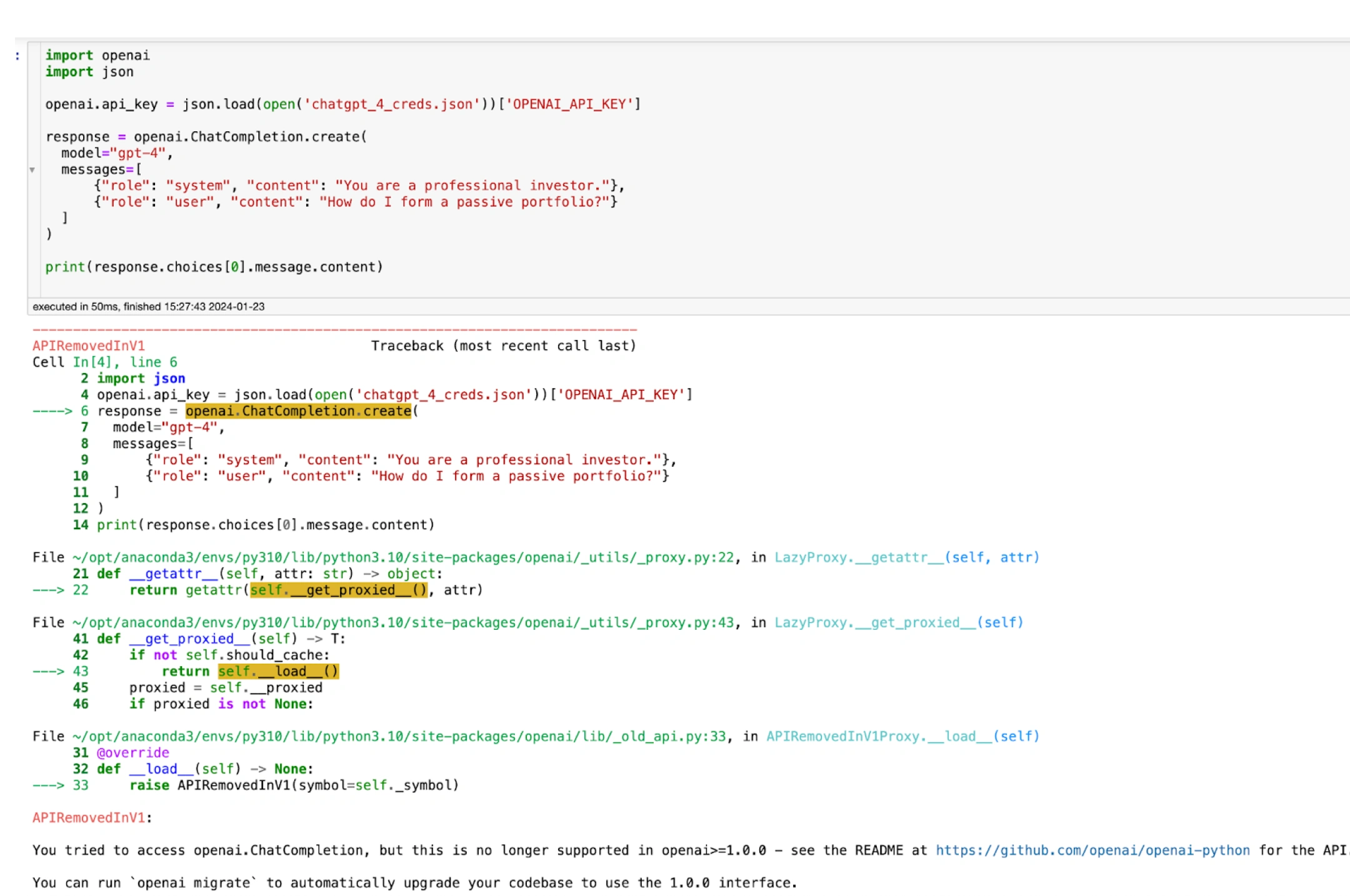

The results of testing this code:

Our evaluation: the code is not exactly incorrect, but it targets an older version of the OpenAI API. The tool may lag behind recent updates to the OpenAI API, and that lag affects its results. The working code looks like the following, where the creds variable holds the contents of a credentials file in this format:

```json
{
  "OPENAI_API_KEY": "...",
  "OPENAI_CHAT_MODEL_NAME": "..."
}
```
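As an illustration of how such a file might be used, here is a minimal sketch that reads and validates the credentials, then sends a chat completion with the current (openai>=1.0) Python client. The helper names `parse_creds`, `load_creds`, and `ask` are hypothetical. The SDK is imported lazily, so the parsing part runs without it. Older, pre-1.0 code called `openai.ChatCompletion.create(...)`, which is the kind of outdated call a lagging assistant may still produce.

```python
import json
from pathlib import Path

# Keys expected in the credentials file shown above.
REQUIRED_KEYS = ("OPENAI_API_KEY", "OPENAI_CHAT_MODEL_NAME")


def parse_creds(text):
    """Parse the credentials JSON and check every required key is present and non-empty."""
    creds = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if not creds.get(key)]
    if missing:
        raise KeyError(f"missing credentials: {', '.join(missing)}")
    return creds


def load_creds(path):
    """Read the credentials file from disk (the file location is up to you)."""
    return parse_creds(Path(path).read_text(encoding="utf-8"))


def ask(creds, system_prompt, question):
    """Send one chat completion request with the openai>=1.0 Python client.

    Pre-1.0 releases used openai.ChatCompletion.create(...) instead; that older
    style no longer works with current versions of the library.
    """
    from openai import OpenAI  # lazy import: parsing creds does not need the SDK

    client = OpenAI(api_key=creds["OPENAI_API_KEY"])
    response = client.chat.completions.create(
        model=creds["OPENAI_CHAT_MODEL_NAME"],
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
    )
    return response.choices[0].message.content
```

Validating the file up front turns a missing key into a clear error before any network call is made.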

If we try to force API Docs to generate code for the current version of the API, we receive the same code as before:

What now?

Our conclusion: at the time of these experiments, the top two ChatGPT-based tools for creating API calls did not work properly, and neither produced correct code for the latest version of the OpenAI API.

Our proposal: since the custom GPTs we reviewed didn’t work, Proxet will build its own tool using LangChain and techniques such as agents, retrieval-augmented generation (RAG), and connecting an LLM to web search.
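To show the idea behind RAG in miniature, here is a toy sketch of retrieving the most relevant documentation snippets and placing them in the prompt, so the model works from current docs rather than its training data. This is not LangChain and not our planned implementation. Simple word-overlap scoring stands in for the embedding-based retrieval a real system would use, and the function names and snippets are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, snippets, k=2):
    """Return the k snippets sharing the most words with the query (toy scoring)."""
    query_words = tokenize(query)
    # sorted() is stable, so ties keep their original order.
    ranked = sorted(snippets, key=lambda s: len(query_words & tokenize(s)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query, snippets, k=2):
    """Prepend the retrieved documentation excerpts to the user's task."""
    context = "\n".join(f"- {s}" for s in retrieve(query, snippets, k))
    return (
        "Answer using the documentation excerpts below.\n"
        f"Documentation:\n{context}\n\nTask: {query}"
    )
```

In a full pipeline, the snippets would come from a web search or an indexed copy of the service's documentation, and the grounded prompt would then be sent to the LLM.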

We’re eager to share those additional findings; we’ll make our experiments and results available in a forthcoming article.