Written by Daria Dremliuga

The need for prototyping in data-driven projects

In today’s hyper-competitive environment, being data-driven is no longer optional—it’s a strategic imperative. Organizations in industries like real estate investment management handle immense volumes of data generated by disparate sources, which must be harmonized across multiple regions and systems.

Data silos and gaps, poor data quality, and misalignment among stakeholders can significantly delay progress or compromise the success of large-scale initiatives. That’s where prototyping enters the picture.

Prototyping helps organizations test assumptions, validate data mapping, and gather end-user feedback in a controlled, cost-effective manner.

By building a small-scale proof of concept (POC)—whether with Power BI, Excel, or another rapid prototyping tool—teams can uncover hidden data quality issues and refine project requirements early, saving significant time and resources.

Defining prototyping for data products

Prototyping in this context means creating quick, often disposable reports or dashboards to verify data availability, data relationships, and stakeholder requirements. It’s a learning tool, not a finished product. By iterating rapidly, data teams can validate their direction, ultimately saving time and budget.

As Microsoft explains, “A prototype—or proof of concept (POC)—is a Power BI solution that's intended to address unknowns and mitigate risk.” This perspective highlights how prototyping also helps organizations identify and manage potential challenges early in the project lifecycle.

Introducing the experiment

This blog post shares an experiment comparing two approaches for creating a Variance financial report prototype in real estate asset management—highlighting the benefits of Power BI.

The goal is to demonstrate how Power BI compares to Excel in terms of:

- Ease of use

- Time efficiency

- Effectiveness at uncovering data gaps

- Scalability for additional scenarios

We’ll begin with a brief look at the original Excel-based POC before walking through the Power BI approach. Along the way, we highlight key insights for decision-makers, business analysts, and data professionals seeking to mitigate risks in large-scale data platform initiatives by starting with smaller-scale prototypes.

Experiment setup

The real-world setting for this experiment involved a multibillion-dollar real estate investment management firm’s Asset Management team. They needed a Variance financial report—an essential tool for assessing performance, managing cash flow, and planning operational improvements across a large, globally distributed portfolio.

In our actual project, we conducted the POC using Excel. However, for the purposes of this blog post, we will hypothetically utilize Power BI to replicate and enhance the POC process, demonstrating its potential advantages.

Brief look at the original Excel-based POC

Objective:

Validate the feasibility of producing two critical financial reports—Variance report its subledger report on Service Charges, which provides a detailed breakdown of specific charges incurred across properties.

Key questions / assumptions:

- Does the data exist? Do the provided Budget and Actuals Excel files contain all the necessary information for generating the two reports?

- What is the quality of the data? Are there missing, incomplete, or inconsistent data points that could hinder accurate reporting?

- Can the data be mapped effectively? Is it feasible to map Budget and Actuals data in a way that Budget vs. Actuals accurately compares planned versus actual expenditures?

- What are the next steps? How should the client address data challenges and prepare for a larger data platform initiative if the POC confirms data feasibility?

Approach:

In the original POC, Excel was used as the primary tool. Data from Budget and Actuals Excel workbooks was mapped to corresponding line items in the Variance report and Service Charges reports using cross-sheet reference formulas. While this method confirmed major data gaps, expanding the analysis beyond a single asset or period required significant effort. Although adequate as a proof of concept, the process was tedious and required frequent manual re-checks. Significant time was needed to update mappings when the client made changes.

Why Power BI?

While Excel can handle basic tasks like summing figures and producing static reports, Power BI is designed to significantly outperform it for prototyping reports with complex data mappings. It seamlessly integrates multiple data sources and efficiently manages large datasets.

Power BI also automates data modeling and transformations, plus it enables interactive, real-time visualizations for deeper insights. Additionally, its Copilot and AI-driven tools further enhance the process by automating data preparation, generating actionable insights, and accelerating smarter, faster prototyping.

Step-by-step guide to the Power BI prototype

Transforming the source files

- Pre-cleaning in Excel: When dealing with poorly structured, unoptimized data files, it's critical to perform pre-cleaning before importing them into Power BI. Many source files were simply too messy, oversized, or inconsistent to work efficiently with Power BI's Power Query without prior intervention. By taking the time to clean and prepare data, we lay the groundwork for smoother prototyping and analysis. Plus, this was a prototype, not a production pipeline with formal ETL.

- Select only needed columns: Rather than feed Power BI an unwieldy “monster” workbook, we copied only the relevant columns—such as account names, amounts—into a new table structure for each dataset (Budget Data, Actuals Data). This not only improves performance but also simplifies debugging and reduces the cognitive load when working in Power BI, especially for prototypes.

- Clean up data: We addressed inconsistencies in data (e.g., stemming from the source file’s export process from legacy portfolio management system) by removing blank rows and correcting column names where needed, thereby creating a cleaner, more consistent dataset.

- Generate fictional data: To protect client confidentiality, we replaced sensitive data—such as amounts, asset identifiers, and asset data—with generated fictional values. Account names and structural details were preserved to ensure the prototype remained aligned with the real-world context while safeguarding proprietary information.

- Add asset lookup table: We added new columns for period and asset in data tables to lay the groundwork for multi-asset, multi-period analysis. Then, we created an Asset Lookup table which contained the list of assets with minimal descriptive columns to enable more visualisations, slicers later on.

- Standardize hierarchies: The Variance report specification (Excel file) contained a four-level account hierarchy, all in one column. We prepped it by referencing each level in separate columns. This will help with accurate aggregation in reports and drill-down.

- Data mapping: To align Budget Data and Actual Data with the Variance and Subledger reports, we manually mapped accounts to report line items using unique IDs. This included adding IDs to the report specification tables and embedding them into the data tables. Some accounts mapped to multiple line items, while others remained unmapped intentionally.

- Document transformations. Throughout this process, we kept a log of the changes made—such as which columns were retained, how the data was restructured, and any manual adjustments.

- Import into Power BI (Power Query):

- Data loading: Each pre-cleaned Excel table was loaded into Power Query, which automatically detected data types, and manual adjustment of currency formats for amount columns, ensuring better accuracy in calculations and visualizations.

- Unpivoting: Where budget data had multiple columns with budget amounts for each period, we unpivoted them.

- Data quality checks and data profiling: We conducted basic validation checks. We flagged any mismatch, such as negative values for gains where we expected positives. Power BI includes data profiling features in Power Query, which provide visual and statistical insights into datasets.

- Date table: From the distinct values in the “Period” column, we created a Date Table inside Power BI. This Date Table was essential for enabling time intelligence functions.

Data modeling and Data Analysis Expressions (DAX)

DAX is a formula language used in Microsoft tools like Power BI and Power Pivot in Excel. It uses functions and operators to build formulas and expressions for data models.

1. Data modeling:

- Table relationships: Power BI automatically established one-to-many relationships between the “Report Line Items” table and both budget and actuals fact tables. No fixes needed.

- Hierarchies and sorting: established a four-level hierarchy for Variance report line items (Level 1, Level 2, etc.), geo and date hierarchies. Implemented a custom sort to ensure the line items hierarchy displays in the desired order.

- Additional tables: When working on DAX measures and visualisations there was a need for two additional tables that were added directly in Power BI. The first was the report type - Variance or Service Charges, which allowed us to build a stacked bar chart for data gaps visualisations. Second was the Exceptions table that detailed report line item IDs that were not applicable if the asset belonged to a commercial real estate type.

.webp)

2. DAX measures:

DAX measures are the specific calculations to gain insights from your data within tools like Power BI.

- Variance measure and KPI’s: We defined basic measures like Variance = (Actuals – Budget). Also, Variance report contained other KPIs that needed to be calculated and displayed as card visual.

- Missing data: DAX measures were used to analyse data gaps and apply filters based on whether the account was mapped to report.

- Copilot: We used Copilot for both data gaps DAX and KPIs DAX measures, which allowed us to speed things up.

Building Reports

Pages & Navigation: We created multiple pages:

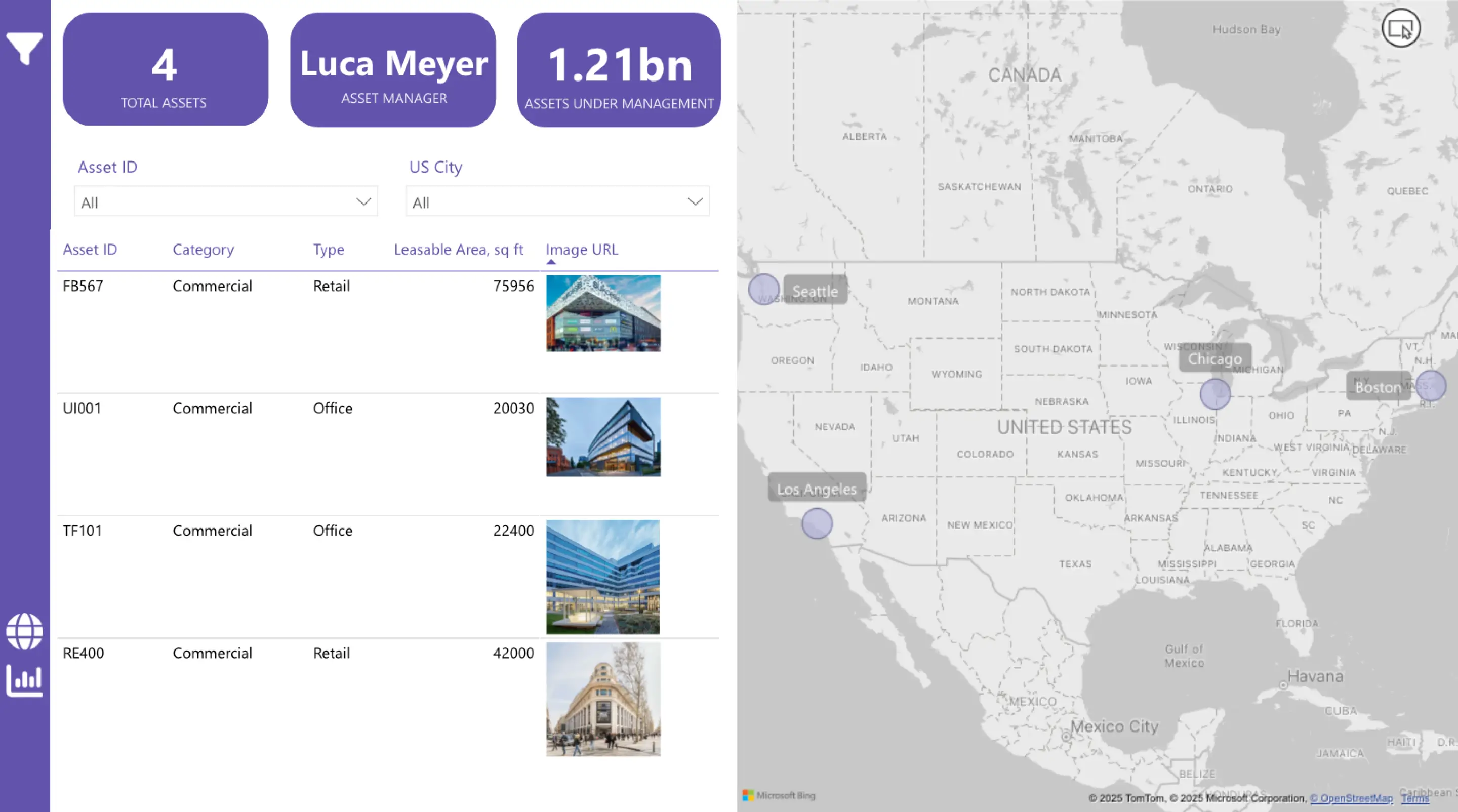

- Portfolio Overview (with a map visual for all assets).

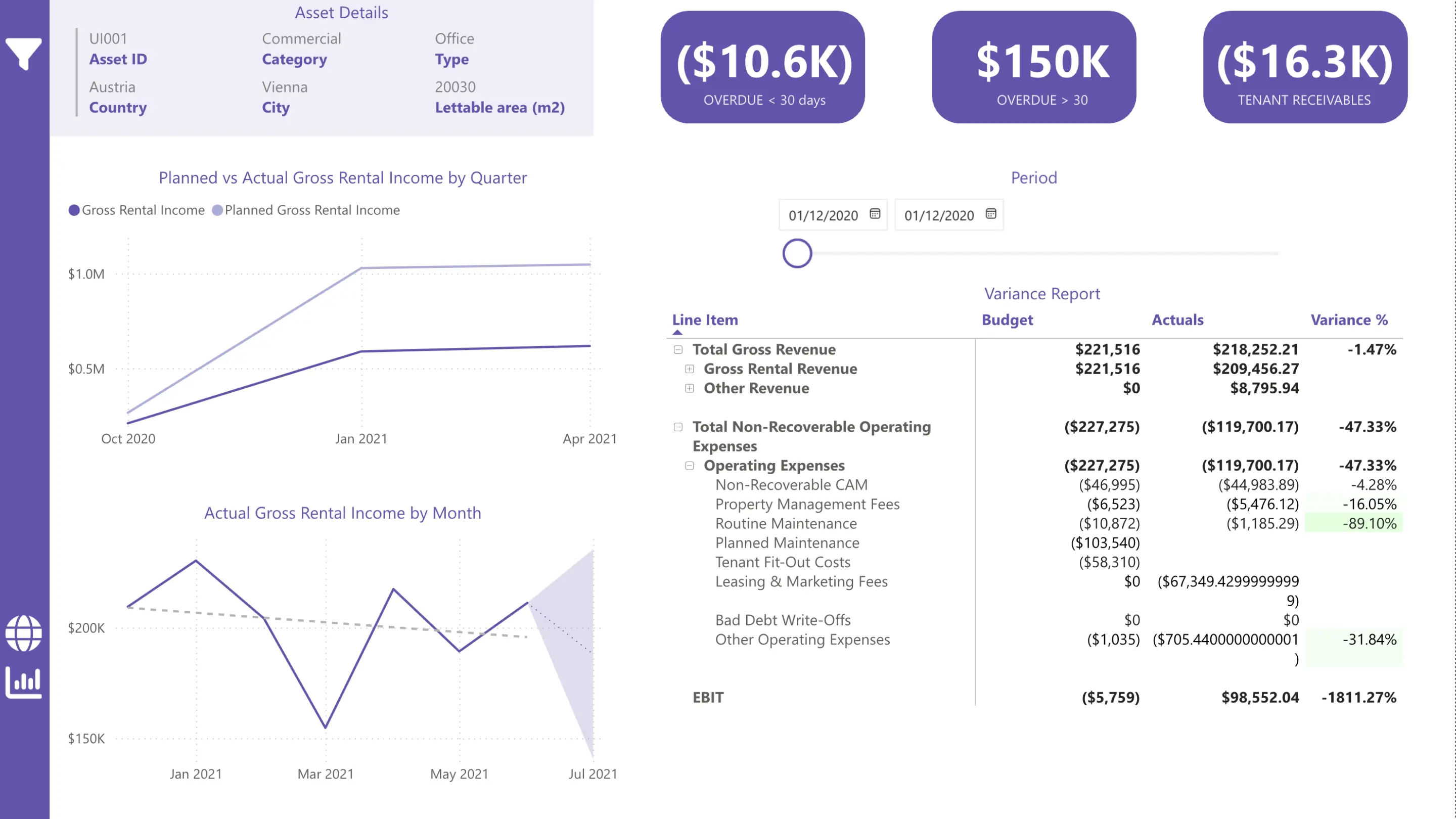

- Variance (using a matrix, cards, filters).

- Service Charges (subledger-level detail).

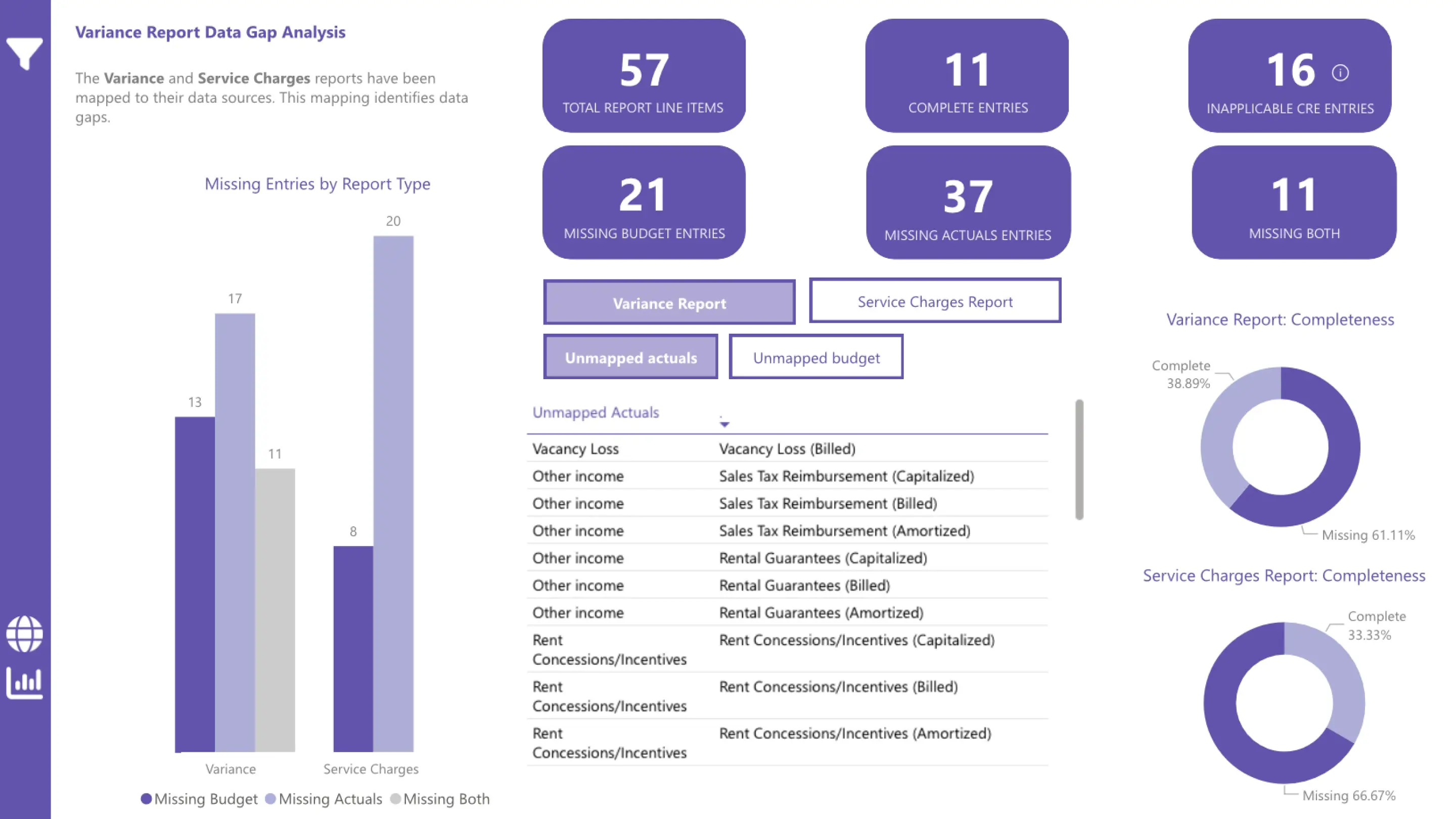

- Data Gaps Analysis (highlighting missing or incomplete data uncovered).

Budget vs. Actuals Matrix

- Matrix Visual: By dropping the four-level hierarchy into rows and adding Amounts from Budget and Actuals tables, the matrix allowed drill-down from parent to child accounts and it calculated EBIT at the bottom. Variance measure was also included and had different background colors depending on values.

- Validation: We cross-checked the matrix totals against the original Excel POC to confirm consistency.

- KPIs and Slicer: Variance report page also included several KPIs cards and slicers and filters, like time period, asset ID, and region. With a single click, users could filter the entire report.

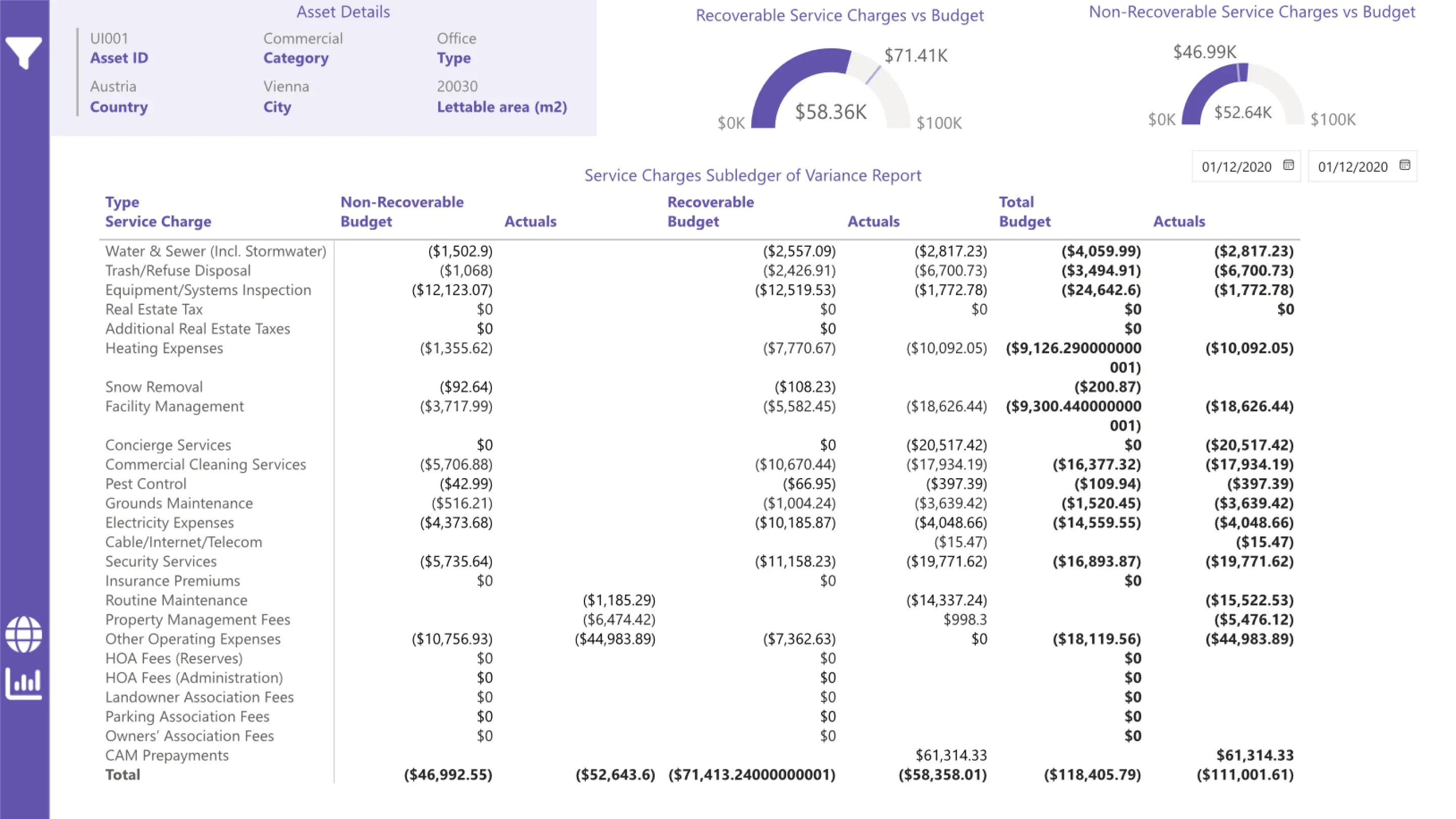

Service charges subledger matrix

Separate page: We built a second Power BI page showing subledger detail for service charges. It was similar to the main report. End users could drill through from the main Budget vs. Actuals matrix to see line-item detail on utilities, maintenance, etc.

Data gaps analysis

Data gaps report: We’ve built a dedicated report page to analyze missing data, the progress of each report through its “ideal” state and a few visualizations and KPIs. It also included a table with the full list of accounts that had missing values on budget or actuals per report type; Copilot helped with this report.

Map overview and navigation

Map visual: Using latitude-longitude in our Asset Lookup table, the first Power BI page showed each asset’s location. Clicking on an asset opened a drill-through to the Budget vs. Actuals page. The custom tooltip was also developed to provide asset details if hovered over Assets on the map.

Final touches and future enhancements

- Role-based security: We experimented with row-level security rules so that Asset Managers would see only their assigned properties, while senior executives could view the entire portfolio.

- AI and Copilot: We briefly explored Power BI’s Copilot and AI capabilities, like Key Influencers, and leveraged Copilot suggestions to speed up DAX measures creation and for inspiration for report building.

- Real-time data connectors: Although our prototype used Excel files, in another project Power BI could connect directly to a database or Sharepoint Folder to update data dynamically with no need to re-connect to a new Excel.

- Publishing to Power BI service: This prototype can be published and be accessible via direct link for sharing with stakeholders.

- Prototype testing: Ensure data accuracy through comprehensive testing, even in the prototype phase.

Comparison

Key Insights from the Experiment

- Power BI immediately surfaced data gaps (e.g., untracked accounts, missing periods, misaligned hierarchies) by enabling quick comparisons, which would have taken far more time in Excel.

- Interactivity and the ability to drill down or filter by asset or period would offer “a-ha” moments for stakeholders, who could see how issues varied across the portfolio.

- Excel’s edge is familiarity: for small or extremely simple prototypes, Excel might suffice. But once you scale to multiple assets or attempt deeper analysis, Power BI’s advantages become evident.

- Scalability matters: Excel can serve as a simple POC tool, but large-scale data inevitably bogs it down, making it impractical for a multi-asset, multi-region scenario.

- Semi-automated data mapping with Fuzzy Merge: Power Query's Fuzzy Merge feature facilitates automatic data mapping, addressing variations across different systems. It effectively handles discrepancies such as mismatched ID formats or manually entered data (e.g., property names), enabling a unified view. When multiple matches occur, Power BI retains all options, allowing for manual review and selection to ensure data accuracy.

Conclusion

In conclusion, successfully navigating large-scale data initiatives hinges not just on technical execution, but fundamentally on achieving clarity and alignment when initial requirements may be ambiguous. This experiment underscores that an iterative prototyping approach, specifically leveraging Power BI, proves invaluable. By providing a tangible, explorable model, Power BI prototypes facilitate clearer communication, generate focused end-user feedback, and help build a shared understanding—those crucial "a-ha" moments —early in the process.

To further enhance this process, embracing AI tools like Copilot can significantly accelerate development cycles, from generating DAX measures to identifying data anomalies. Finally, empowering business analysts with strong Power BI skills is essential, ensuring the organization can fully harness these capabilities to bridge the gap between data potential and stakeholder value.